# load our HF API Token

from dotenv import load_dotenv

load_dotenv('./secrets.env')AI at the Gate

Introduction

It’s 2025 and major tech giants are locked in an arm race to AGI. Smaller more capable models are coming up every few weeks.

Gone are the days of figuring out ways to acquire the latest SOTA models’ weight, setting up all the dependencies, getting the right CUDA version, AND THEN finding out if the model actual does what it claims to do in the paper.

Argubly now the skill to master is building model agnostic systems and being able to quickly reinterate them to the latest models.

Luckily HuggingFace is where all the open-sourced AI community host and release their latest models. Just to illustrate, here are a few of the more well known names that’s active in the community:

In this article, we’ll look at 3 different ways to quickly access most of the models on the platform:

- using the Inference API

- calling HuggingFace Space’s API

- using the

transformerLibrary

Method 1 and 2 will be the most straight forward requiring almost zero extra requirements nor expensive hardware.

We will need our HUGGINGFACEHUB_API_TOKEN for the first two example, so we will load it from our secrets.env2 with load_dotenv:

I. The Inference API

since about May 2025, the serverless inference API has been replaced by HF Inference which has less3 available models because they are getting other inference providers involved, which now has billing implication4.

using huggingface_hub.InferenceClient()

For our first example, we are going to test one of the open-sourced model in text-to-image generation as inspired by Simon Willison’s pelicans on bicycle LLM model vibe check. Under the old serverless inference API we could simply use the requests library calling the endpoint URL with a JSON payload but now with inference providers, the construction of those endpoint URLs are a bit more complicated5 but luckily huggingface_hub.InferenceClient() helps simplify things:

import os

from huggingface_hub import InferenceClient

client = InferenceClient(provider = "auto", api_key = os.environ["HUGGINGFACEHUB_API_TOKEN"])

image = client.text_to_image( "pelican on a bicycle vector illustration with mujibvector style, isolated in white background",

model = "mujibanget/vector-illustration")

image- 1

-

for this model you could set

providerto one ofauto,replicate, orfal-ai - 2

- to learn more about this model click here

mujibanget/vector-illustration

using OpenAI compatible endpoint

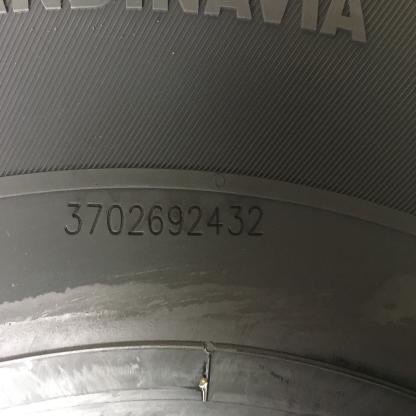

For chat completion models, we can load up HuggingFace’s LLM the same way we call OpenAI’s models. So for this example, let’s test out one of Roboflow’s Vision Checkup dataset’s OCR prompt on Cohere’s Aya-Vision-8B6. Before creating your OpenAI() client object, you will need to check the model’s page for the relavent provider’s API and model name7.

from IPython.display import Image, display

from openai import OpenAI

prompt_im_url = "https://visioncheckup.com/images/compressed/tire.jpeg"

client = OpenAI(

base_url="https://router.huggingface.co/v1",

api_key=os.environ["HUGGINGFACEHUB_API_TOKEN"],

)

completion = client.chat.completions.create(

model="CohereLabs/aya-vision-8b",

messages=[

{

"role": "user",

"content": [

{

"type": "text",

"text": "What is the serial number on the tire? Answer only the serial number."

},

{

"type": "image_url",

"image_url": {

"url": prompt_im_url

}

}

]

}

],

)

display(Image(prompt_im_url))

print(completion.choices[0].message)- 1

-

these endpoint routing is still actively being worked on; before July 21, you needed to call

"https://router.huggingface.co/cohere/compatibility/v1" - 2

-

before July 21, if you want to specify the provider instead of using auto routing you would need to use the model name

"c4ai-aya-vision-8b"with the custom cohere URL in 1, but now you can just add a tag to the model name like"CohereLabs/aya-vision-8b:cohere"

ChatCompletionMessage(content='3702692432', refusal=None, role='assistant', audio=None, function_call=None, tool_calls=None)II. HuggingFace Space’s API

But what about models that do not have inference provider?

For example, google/gemma-3n-E4B-it isn’t deployed by any inference provider as of the writing of this article. However, there are a few spaces running this model and even one written by the HuggingFace team.

Spaces were originally created for the community to demo their project. One of the ways to create spaces is with gradio which is also created by HuggingFace. What sets it apart from other python apps, like Streamlit, is that gradio apps has API and MCP server8 built in! Therefore, you could call most spaces built with gradio as an API.

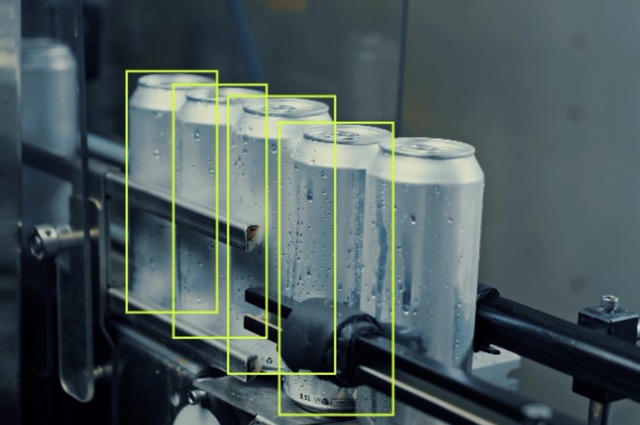

For this example, we are going to use another prompt from VisionCheckup and compare the result against another model that does not have inference provider support: SmolVLM29

from gradio_client import Client, handle_file

prompt_im_url = 'https://visioncheckup.com/images/compressed/missing_annotation.jpeg'

model_zoo = {

"gemma-3n-E4B-it": Client("huggingface-projects/gemma-3n-E4B-it", hf_token = os.environ["HUGGINGFACEHUB_API_TOKEN"]),

"SmolVLM2": Client("Didier/Vision_Language_SmolVLM2")

}

results = {

model_name: client.predict(

message={"text":"How many annotations are missing in the image? Return only a number.",

"files":[handle_file(prompt_im_url)]

},

# system_prompt="You are a helpful assistant.",

# max_new_tokens=700,

api_name="/chat",

) for model_name, client in model_zoo.items()}

display(Image(prompt_im_url))

print(results)- 1

-

hf_tokenis optional here but if you are calling spaces that use ZeroGPU you will be limited to 5 inference minute per day without a PRO account token - 2

-

commenting out

system_promptsince it’s not a parameters for the SmolVLM2 Space API - 3

-

commenting out

max_new_tokenssince it’s not a parameters for the SmolVLM2 Space API

Loaded as API: https://huggingface-projects-gemma-3n-e4b-it.hf.space ✔

Loaded as API: https://didier-vision-language-smolvlm2.hf.space ✔

{'gemma-3n-E4B-it': '1', 'SmolVLM2': ' 4'}III. the transformers library

And finally for those cases where the models are neither supported by inference provider, nor availble in a space, we can load it up in the “traditional” sense using the transformers library

For example, the InternVL3 model supports Chat Completion on Video Inputs. It comes in a variety of model size (with Quantization) and fairly high score on various vision benchmarks. But the model is relatively new10 and released by a small group of chinese researchers at OpenGVLab, so currently there’s no inference provider support and no space demo exists.

But that doesn’t stop us from testing it out locally against one of the videos on CameraBench11 Video 1. Here we are experimenting with a prompt to extract enough temporal and visual features for downstream classification task12.

Code

%%time

from transformers import AutoProcessor, AutoModelForImageTextToText

import torch

from IPython.display import Video

torch_device = "mps"

model_checkpoint = "OpenGVLab/InternVL3-1B-hf"

processor = AutoProcessor.from_pretrained(model_checkpoint)

model = AutoModelForImageTextToText.from_pretrained(model_checkpoint, device_map=torch_device, torch_dtype=torch.bfloat16)

video_url = "https://huggingface.co/datasets/syCen/CameraBench/resolve/main/videos/-FGVJS3rT80.2.1.mp4"

caption_prompt = """

Describe the main subject(s) and the primary activity or event depicted in this video segment in detail.

Focus on dynamic characteristics, temporal patterns, and visual attributes that would help distinguish this

from similar actions, objects, or events for classification purposes.

Provide a concise yet comprehensive caption that highlights key movements, states, interactions, and any significant changes

in the scene or subjects over time.

For example, include information about the subjects' appearance (color, shape, distinctive markings),

their specific actions (e.g., running, grasping, turning), how they interact with other objects or people,

the sequence of events, and the evolving environment.

Caption:

"""

messages = [

{

"role": "user",

"content": [

{

"type": "video",

"url": video_url,

},

{"type": "text", "text": caption_prompt},

],

}

]

inputs = processor.apply_chat_template(

messages,

add_generation_prompt=True,

tokenize=True,

return_dict=True,

return_tensors="pt",

num_frames = 8

).to(model.device, dtype=torch.bfloat16)

output = model.generate(**inputs, max_new_tokens=500)

decoded_output = processor.decode(output[0, inputs["input_ids"].shape[1] :], skip_special_tokens=True)

display(Video(video_url, width=500))

print( f'{model_checkpoint} output: {decoded_output}')- 1

-

you will need to

pip install avwhich is PyAV - 2

-

change this to

cpuif you don’t have a Apple Sillicon chip orcudaif you got one

Setting `pad_token_id` to `eos_token_id`:151645 for open-end generation.OpenGVLab/InternVL3-1B-hf output: The video begins with a view from inside a spacecraft, looking out towards Earth. The spacecraft's circular structure is prominently visible, with a large circular opening at the top. The Earth is seen in the background, with its blue oceans and scattered white clouds. The spacecraft's surface has a black and white striped pattern. As the video progresses, the spacecraft moves closer to the Earth, and the opening at the top of the structure becomes more visible. The spacecraft continues to ascend, and the opening gradually becomes more circular. The Earth remains in the background, and the spacecraft's surface continues to show the black and white striped pattern. The video concludes with the spacecraft nearing the Earth, with the opening at the top of the structure becoming more circular.

CPU times: user 7.36 s, sys: 19.1 s, total: 26.4 s

Wall time: 46.1 smake your own Space

but since making the model run is so simple, why not make your own space so that the model can be hosted on HuggingFace’s infrastructure for “free”13?

For models with utility, it might make sense to just write the code once and then have it be always available in the “cloud”. For example, our SAM2 Space provides Image and Video inference running on ZeroGPU14. As a bonus, building gradio apps are actually quite simple, the docs are well written, and there’s even an interface for loading any model or space directly into the space you are trying to create. Talk about standing on shoulders of giants!

Others in the community have also found creative ways to use Spaces, for example:

- Deploying a FastAPI app via Docker, like this space that’s basically an ImageBind Video Embedding API15

- Deploying n8n on Huggingface Spaces with example code and literally lots of people doing it

But that’s a topic for another day…

Footnotes

while all models are openly accessible on HuggingFace, some models are gated and requires you to agree to their terms and conditions. This example screen is for access to

CohereLabs/aya-vision-8b↩︎your

secrets.envshould look like this:↩︎HUGGINGFACEHUB_API_TOKEN=yyyyy LANGSMITH_API_KEY=zzzzz OpenRouter_key=xxxxxin the long run more models will be available as more providers take on the task of hosting models instead of HuggingFace hosting everything themselves; currently there are about 46k models available with inference provider support.↩︎

see their blog post on the release here for more details, PRO users get $2 inference credit per month and HF inference still seems to be free. Read the official doc on pricing details.↩︎

under serverless inference API the endpoint nomenclature is simply

https://api-inference.huggingface.co/models/{model_name}but now with inference provider, different provider have slightly different endpoint nomenclature. For the example model below, using Replicate the endpoint ishttps://router.huggingface.co/replicate/v1/models/black-forest-labs/flux-dev-lora/predictionsbut using fal it ishttps://router.huggingface.co/fal-ai/fal-ai/flux-lora. The official doc actually have a discussion on how to choose the best approach to call these models.↩︎some models like this one are “gated” meaning that it’s publicly accessible but you must first agree to the terms and conditions on HuggingFace prior to access per Figure 1. This particular model is for non-commercial use only and is one of the featured model in this Multimodal OCR demo↩︎

previously using

langchainyou could justfrom langchain_openai import ChatOpenAI chat_model = ChatOpenAI(model="CohereLabs/aya-vision-8b", base_url = "https://api-inference.huggingface.co/v1/", api_key = os.environ['HUGGINGFACEHUB_API_TOKEN'] )which obviously doesn’t work anymore but the latest version of

langchain_huggingfaceplugin might still provide an easy way to load these models↩︎yes this makes HuggingFace Space a “MCP App Store” powered by the community as they call it↩︎

using this space which demos the model variant

HuggingFaceTB/SmolVLM2-2.2B-Instruct↩︎released Apr 2025 and was “#1 Paper of the day”↩︎

there are lots of video dataset on huggingface, this CameraBench was used to validate a Qwen2.5VL model fine tuned for camera motion understanding↩︎

a June 2025 study found higher image classification accuracy when combining VLM & LLM and the FlexCap model (March 2024) also propose a captioning first approach then second-stage reasoning task using a LLM. For more, see research notes created in Khoj and NotebookLM↩︎

spaces go to sleep after 48 hours of inactivity but you could easily set up some github action to ping it daily↩︎

ZeroGPU are H200 with 70GB of VRAM, all users get 5 minutes of inference time per day and PROs get 25 minutes. And the quota is on inference, NOT for the space’s uptime (which could practically be indefinite).↩︎

although the built in API and MCP server that comes with any gradio app kinda make this “hack” obsolete↩︎